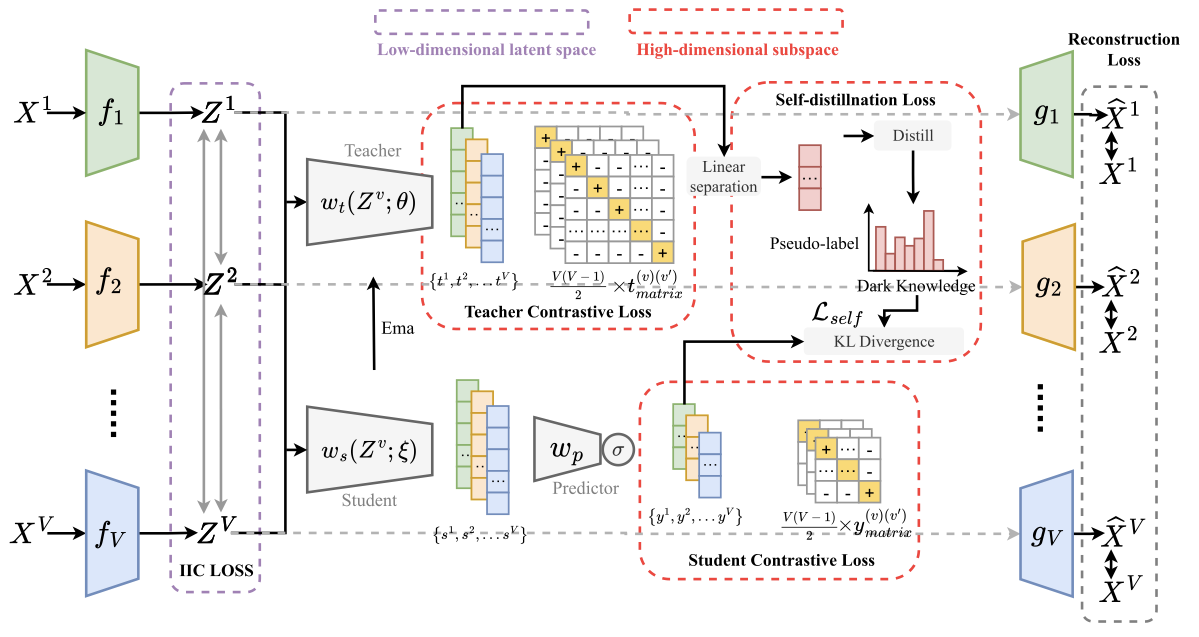

Toward Generalized Multistage Clustering: Multiview Self-Distillation

Published in TNNLS, 2024

DistilMVC is a multistage deep multi-view clustering framework built on multi-view self-distillation. A teacher-student model distills the dark knowledge contained in pseudo-label distributions. This distillation is combined with contrastive learning and cross-view mutual information maximization to correct overconfident pseudo-labels. The result is state-of-the-art clustering performance in real-world scenarios.
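The paper's exact objectives are not reproduced here. As a rough illustration of the three ingredients named above, the NumPy sketch below uses generic textbook forms, which are assumptions and not DistilMVC's implementation. It includes a temperature-softened KL distillation loss between teacher and student pseudo-label distributions. It also includes a cross-view InfoNCE contrastive loss and an IIC-style mutual information estimate between the cluster assignments of two views. All function names and default temperatures are hypothetical.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Row-wise softmax; a higher temperature gives softer distributions."""
    z = logits / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def distillation_loss(teacher_logits, student_logits, temperature=2.0, eps=1e-12):
    """Mean KL(teacher || student) over samples (generic knowledge distillation, assumed form).
    Softening both distributions lets the non-argmax probabilities ("dark knowledge") contribute."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = np.sum(p_t * (np.log(p_t + eps) - np.log(p_s + eps)), axis=1)
    return float(kl.mean())

def info_nce(z1, z2, temperature=0.5):
    """Cross-view InfoNCE (assumed form): row i of z1 and row i of z2 are a positive pair,
    and every other row of z2 is a negative for row i of z1."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    sim = z1 @ z2.T / temperature                      # (n, n) scaled cosine similarities
    sim = sim - sim.max(axis=1, keepdims=True)         # stabilize the log-sum-exp
    log_prob = sim - np.log(np.exp(sim).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))          # positives sit on the diagonal

def cross_view_mutual_information(q1, q2, eps=1e-12):
    """Mutual information between the soft cluster assignments q1, q2 (each n x k, rows sum to 1)
    of two views, estimated from their empirical joint distribution (IIC-style, assumed form)."""
    joint = q1.T @ q2 / q1.shape[0]       # (k, k) joint distribution; entries sum to 1
    joint = (joint + joint.T) / 2         # symmetrize over the two views
    p1 = joint.sum(axis=1, keepdims=True) # marginal of view 1, shape (k, 1)
    p2 = joint.sum(axis=0, keepdims=True) # marginal of view 2, shape (1, k)
    return float(np.sum(joint * (np.log(joint + eps) - np.log(p1 + eps) - np.log(p2 + eps))))
```

In this sketch the distillation and contrastive terms would be minimized and the mutual information term maximized. Raising `temperature` in `distillation_loss` flattens both distributions, so the student learns the teacher's relative confidence across all clusters rather than only its top choice. That softening is the usual motivation for using temperature-scaled targets against overconfident labels.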

Recommended citation: Wang J, Xu Z, Wang X, Li T. Toward Generalized Multistage Clustering: Multiview Self-Distillation. IEEE Transactions on Neural Networks and Learning Systems. 2024 Nov 11.
